Hello folks, good to see you again! In the previous part, we covered what dynamic rendering is and why it needs to be implemented. In this article, we’ll go over the available solutions, then find and implement the best one for our requirements.

https://unsplash.com/photos/oQvESMKUkzM

1) Solutions to offer

Let’s explore the most popular solutions for performing dynamic rendering. At 1mg we follow an isomorphic architecture with both route-level and component-level chunking, so these solutions won’t work for us. They may still come in handy for your architecture, so let’s jump right into it!

a) Puppeteer

Using this, we can launch an instance of a headless Chrome browser and provide the URL of our web page. At this point we get multiple options: either take a snapshot or save the page as a PDF.

The hitch is that spawning a new headless Chrome instance in production can be time-consuming and CPU-heavy.

Ideal Use Case: Unit test cases, as taking snapshots and saving PDFs is more helpful there, and Google promotes it too. Although we can use it for SSR or for serving bots, it doesn’t have prebuilt caching support, so you’ll need to build something of your own.

Quick start | Tools for Web Developers | Google Developers

b) Rendertron

This is similar to Puppeteer in that it also spawns a new instance of headless Chrome. Rendertron gives you a cached version of your generated static HTML.

This static HTML can be used as a server-side rendered static version of your client-side rendered dynamic page, and you can serve it directly to your bots.

Again, even Google doesn’t promote using this in production. If you have a huge application with a ton of URLs, Rendertron will be a very slow option, and it might not even be feasible if the content is updated too frequently.

Ideal Use Case: A client-side rendered application with no SSR support. In this case you’d otherwise be serving blank HTML to the bots, so Rendertron can be a great option. It saves you from implementing SSR yourself while still serving static HTML to the bots.

Dynamic Rendering with Rendertron | Google Search Central Blog

c) Pre-renderer

This can be a great solution if your application has fewer than 250 pages, since it is free at that size.

As per the documentation, it handles most use cases, but for a large application you have to pay on a monthly basis. Following is the pricing for the same:

Prerender - Dynamic Rendering for Effective JavaScript SEO | Prerender

Now, without further ado, let’s jump right into our own custom-built solution.

d) Our Custom Solution

Here is the stepwise process that we’ll follow to implement the solution:

- Identify whether the request is coming from a bot.

- Dynamically change the importing behaviour of all the component chunks, from client-side rendering to server-side rendering.

- We’ll achieve this with the help of Redux state and by changing how we import our components.

- Finally, we’ll remove any JS, CSS, or third-party scripts that aren’t required.
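The last step of the list can be sketched roughly as below. This is only a minimal illustration, not the implementation used at 1mg: the function name `stripExecutableScripts` is hypothetical, the regex approach is naive compared to a real HTML parser, and keeping JSON-LD blocks is an assumption about which scripts count as "required" for bots.

```typescript
// Naive regex-based sketch; a real HTML parser is more robust for production markup.
// Assumption: <script type="application/ld+json"> blocks are structured data that
// bots still need, so they are kept. Every other <script> element is removed.
function stripExecutableScripts(html: string): string {
  const scriptPattern =
    /<script\b(?![^>]*type=["']application\/ld\+json["'])[^>]*>[\s\S]*?<\/script>/gi;
  return html.replace(scriptPattern, "");
}
```

The function would run on the server-rendered HTML string just before it is sent to a bot, leaving the response for normal users untouched.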

Folks who aren’t familiar with component-based splitting using @loadable/component, please check out my previous article before proceeding further.

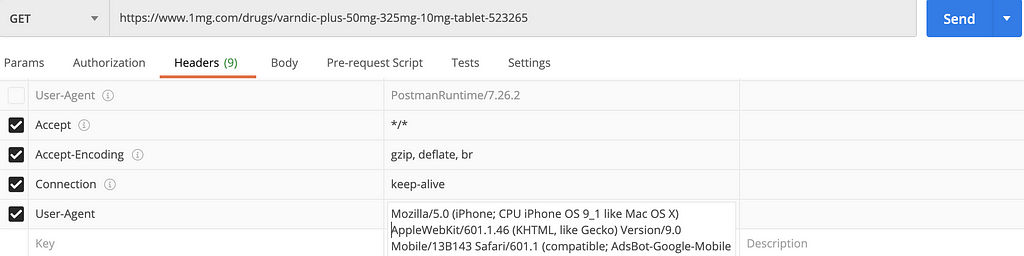

2) Request Identification: user agents

To start implementing our own solution, we’ll need to identify where exactly the requests are coming from (bots vs. normal users).

Identifying user agents is the best way to do that. Every bot sends an identifying string in the “user-agent” header of each request it makes while crawling. Here is how it goes:

a) The request is captured on the server along with all the request headers.

b) We extract the “user-agent” header, which is a string.

c) The “user-agent” can then be matched against a predefined list of bots we want to cater to.

Following is a list of Google bots and their user agents:

Overview of Google crawlers (user agents) | Google Search Central
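Steps a) to c) could look something like the sketch below. The helper names (`getUserAgent`, `isBotUserAgent`, `isBotRequest`) and the header-map shape are assumptions made for illustration, and the token list is only a small sample that should be extended from the official crawler list.

```typescript
// Illustrative subset of crawler tokens (lower-cased); extend it as required.
const BOT_USER_AGENT_TOKENS: string[] = [
  "googlebot",
  "adsbot-google",
  "mediapartners-google",
];

// Assumed shape of the header map; Node lower-cases incoming header names.
type RequestHeaders = Record<string, string | string[] | undefined>;

// Step b: pull the "user-agent" header out of the request headers as a string.
function getUserAgent(headers: RequestHeaders): string {
  const raw = headers["user-agent"];
  if (raw === undefined) return "";
  return typeof raw === "string" ? raw : raw.join(" ");
}

// Step c: case-insensitive substring match against the predefined bot list.
function isBotUserAgent(userAgent: string): boolean {
  const normalized = userAgent.toLowerCase();
  return BOT_USER_AGENT_TOKENS.some(
    (token) => normalized.indexOf(token) !== -1
  );
}

function isBotRequest(headers: RequestHeaders): boolean {
  return isBotUserAgent(getUserAgent(headers));
}
```

Keep in mind that the “user-agent” header is supplied by the client, so a substring match like this only tells us what the request claims to be.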

3) Changing the Component Imports

Now we’ll be making the following changes to our component imports to ensure this solution can work:

- Instead of importing our components at the top of the file, outside the main function, we’ll import them inside the main function.

- The main function is the exported function (for functional components/Hooks) or the render method (for class-based components).

- Now all the components should have an additional property “SSR”.

- Its value will change dynamically based on the redux state which we’ll set on the server.

- This state will change as per the “user-agent” header in the request.
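A rough sketch of the idea in the list above, assuming the “SSR” property maps to the `ssr` option that @loadable/component accepts as its second argument. The `RenderState` shape and the `chunkOptionsFor` helper are hypothetical names, and the actual `loadable` call is shown only in a comment so the block stays self-contained.

```typescript
// Hypothetical slice of the Redux state that the server fills in per request.
interface RenderState {
  isBot: boolean;
}

// Options object in the shape passed as loadable(() => import(...), options).
interface ChunkOptions {
  ssr: boolean;
}

// Bots get the chunk rendered on the server; normal users load it on the client.
function chunkOptionsFor(state: RenderState): ChunkOptions {
  return { ssr: state.isBot };
}

// Inside the exported component function (or the render method), the import
// would then look roughly like this, with `loadable` from @loadable/component:
//
//   const Header = loadable(() => import("./Header"), chunkOptionsFor(state));
```

Because the options are computed inside the main function, they are evaluated on every render with the current state instead of once when the module loads.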

4) Using redux to dynamically change the render state

Now we need to set an “IS_BOT” state on the server, depending on the request’s “user-agent”.

We can add more Google bots and user agents as required.
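As a minimal sketch of such a state, the reducer below is written as a plain function so it runs without the Redux library. The action type `SET_IS_BOT`, the action creator `setIsBot`, and the state shape are all hypothetical names chosen for illustration.

```typescript
// Hypothetical action type and state shape, named after the "IS_BOT" state.
const SET_IS_BOT = "SET_IS_BOT";

interface BotState {
  IS_BOT: boolean;
}

interface SetIsBotAction {
  type: string;
  payload?: boolean;
}

const initialBotState: BotState = { IS_BOT: false };

// Plain (state, action) => state reducer, the shape Redux expects.
function botReducer(
  state: BotState = initialBotState,
  action: SetIsBotAction
): BotState {
  if (action.type === SET_IS_BOT) {
    // Return a new object instead of mutating the previous state.
    return { ...state, IS_BOT: action.payload === true };
  }
  return state;
}

// Action creator the server would dispatch once per incoming request.
function setIsBot(isBot: boolean): SetIsBotAction {
  return { type: SET_IS_BOT, payload: isBot };
}
```

On the server, the result of the user-agent check would be passed to `setIsBot` and dispatched before rendering, so every component reads the same value during that request.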

Yes, this is it!

5) Verifying our Implementation

To verify whether we are getting different HTML for different user agents, we can use a simple tool, Postman, which lets us add a custom header to a request.

In our case, we’ll add a user agent for Googlebot and one for a normal user, and observe how the content changes in both scenarios.

6) Final words

To sum up, when it comes to SEO it’s essential to serve contentful static HTML that is lightweight. At the same time, to meet the performance needs of the end user, we need to set up a dynamic rendering architecture.

We can choose between the pre-built solutions or come up with our own solution for our requirements.

I hope you enjoyed this blog!

Do share your feedback and suggestions in the comments section below. Or, if you just want to talk shop, feel free to reach out to me on LinkedIn and Twitter.

If you liked this blog, please hit the 👏 . Stay tuned for the next one!

Web Performance and SEO: Dynamic Rendering (Part-2) was originally published in Tata 1mg Engineering on Medium, where people are continuing the conversation by highlighting and responding to this story.
